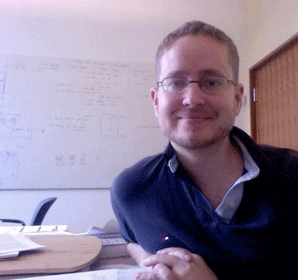

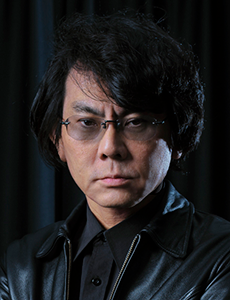

Frank Soong

Frank K. Soong, Principal Researcher/Research Manager

Speech Group, Microsoft Research Asia (MSRA)

Beijing, China

https://www.microsoft.com/en-us/research/people/frankkps/


A person’s speech is strongly conditioned by his own articulators and the language(s) he speaks. Hence, rendering speech across speakers or across languages from a source speaker’s speech data, collected in his native language, is both academically challenging and desirable for technology and applications. The quality of the rendered speech is assessed in three dimensions: naturalness, intelligibility and similarity to the source speaker. When rendering is done both cross-speaker and cross-language, the three criteria usually cannot all be met. We will objectively analyze the key factors of rendering quality in both the acoustic and the phonetic domains. We use monolingual speech databases recorded by different speakers, as well as bilingual databases recorded by the same speaker(s). Measures in the acoustic and phonetic spaces are adopted to quantify naturalness, intelligibility and speaker timbre objectively. Our cross-lingual TTS, based on the “trajectory tiling” algorithm, is used as the baseline system for comparison. To normalize speaker differences automatically, a speaker-independent, DNN-based ASR acoustic model is used. Kullback-Leibler divergence (KLD) is proposed to statistically measure the phonetic similarity between any two given speech segments from different speakers or languages, in order to select good rendering candidates. Demos of voice conversion, speaker-adaptive TTS and cross-lingual TTS will be shown across speakers, across languages, or both. The implications of this research for low-resourced speech research, speaker adaptation, the “average speaker’s voice”, accented/dialectal speech processing, speech-to-speech translation, audio-visual TTS, etc. will be discussed.
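
The abstract does not say how the KLD between two speech segments is computed. The following is a minimal sketch under stated assumptions, not the method presented in the talk. It assumes each segment is represented as a sequence of per-frame phonetic posterior vectors (a "posteriorgram") produced by a speaker-independent ASR acoustic model. It uses a symmetrized KLD as the frame-level distance and dynamic time warping (DTW) to align segments of different durations. The posteriorgram input, the symmetrization, the DTW alignment and the function names `kl_divergence`, `symmetric_kl` and `segment_distance` are all hypothetical illustrations.

```python
import math
from typing import Sequence

# Assumption: one frame of a posteriorgram = probabilities over phonetic classes (sums to 1).
Frame = Sequence[float]
Segment = Sequence[Frame]


def kl_divergence(p: Frame, q: Frame, eps: float = 1e-10) -> float:
    """D_KL(p || q) for two discrete distributions over the same phonetic classes.

    Terms with p_i == 0 contribute nothing; q_i is floored at eps to avoid log(0).
    """
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i > 0.0:
            total += p_i * math.log(p_i / max(q_i, eps))
    return total


def symmetric_kl(p: Frame, q: Frame) -> float:
    """Symmetrized KLD, D(p || q) + D(q || p), used here as a frame-level distance."""
    return kl_divergence(p, q) + kl_divergence(q, p)


def segment_distance(seg_a: Segment, seg_b: Segment) -> float:
    """Average symmetric KLD along the best DTW alignment of two posteriorgrams.

    DTW (an illustrative choice) lets segments spoken at different rates be compared.
    """
    if not seg_a or not seg_b:
        raise ValueError("both segments must contain at least one frame")
    n, m = len(seg_a), len(seg_b)
    inf = float("inf")
    # cost[i][j]: accumulated distance of the best path ending at frames (i-1, j-1)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    # steps[i][j]: number of aligned frame pairs on that path, for length normalization
    steps = [[0] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = symmetric_kl(seg_a[i - 1], seg_b[j - 1])
            best_cost, best_steps = min(
                (cost[i - 1][j - 1], steps[i - 1][j - 1]),  # advance both segments
                (cost[i - 1][j], steps[i - 1][j]),          # advance seg_a, reuse seg_b frame
                (cost[i][j - 1], steps[i][j - 1]),          # advance seg_b, reuse seg_a frame
                key=lambda pair: pair[0],
            )
            cost[i][j] = best_cost + local
            steps[i][j] = best_steps + 1
    return cost[n][m] / steps[n][m]


if __name__ == "__main__":
    a = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
    a_slow = [a[0], a[0], a[1]]  # same phonetic content, first frame held longer
    b = [[0.1, 0.1, 0.8], [0.2, 0.2, 0.6]]
    print(segment_distance(a, a_slow))  # 0.0
    print(segment_distance(a, b) > 0.0)  # True
```

A smaller score means the two segments are phonetically closer. Under this sketch, candidates for rendering would be ranked by `segment_distance`. Because the posteriors come from a speaker-independent model, the score is meant to reflect phonetic content rather than speaker identity.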

Frank K. Soong is a Principal Researcher and Research Manager in the Speech Group, Microsoft Research Asia (MSRA), Beijing, China, where he works on fundamental speech research and its practical applications. His professional research career spans more than 30 years, first at Bell Labs, US, then at ATR, Japan, before he joined MSRA in 2004. At Bell Labs, he worked on stochastic modeling of speech signals, optimal decoding algorithms, speech analysis and coding, and speech and speaker recognition. He was responsible for developing the recognition algorithm that was built into voice-activated mobile phone products rated by Mobile Office Magazine (Apr. 1993) as “outstandingly the best”. He is a co-recipient of the Bell Labs President Gold Award for developing the Bell Labs Automatic Speech Recognition (BLASR) system. He has served as a member of the Speech and Language Technical Committee of the IEEE Signal Processing Society and in other society functions, including as Associate Editor of the IEEE Transactions on Speech and Audio Processing and as chair of an IEEE workshop. He has published more than 200 papers and co-edited a widely used reference book, Automatic Speech and Speaker Recognition: Advanced Topics (Kluwer, 1996). He is a visiting professor at the Chinese University of Hong Kong (CUHK) and a few other top-rated universities in China, and co-Director of the National MSRA-CUHK Joint Research Lab. He received his BS, MS and PhD degrees, all in Electrical Engineering, from National Taiwan University, the University of Rhode Island, and Stanford University, respectively. He is an IEEE Fellow “for contributions to digital processing of speech”.